Accessing Models: Method 1, Using Downloaded Models

You can only do this after setting up Ollama and Docker.

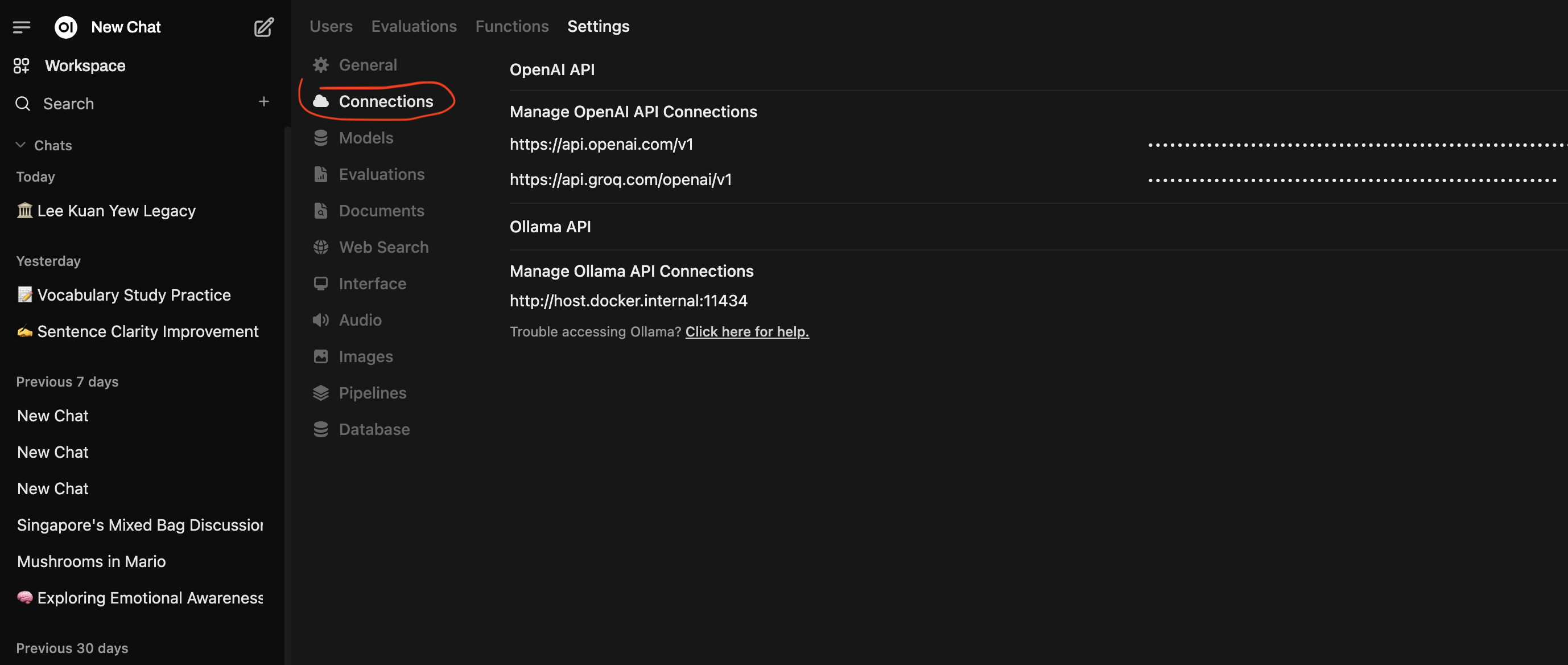

So how can we start playing with LLMs using this setup? During this course we will learn three ways to access LLMs in Open WebUI (see Figure 1).

The first method is to download models directly through Ollama, which we cover on this page. The second method is here. The third method is here.

Method 1 suits smaller LLMs that your computer has enough computing resources (e.g., GPU and RAM) to run. Method 2 is for large models such as OpenAI models, and it may cost money because API calls are billed.

We will learn Method 1 in this guide.

Method 1: Access LLMs using Ollama

The steps for Method 1 follow. You can also watch Video 1, starting at about 2:10, to see how to download an LLM using Ollama in Open WebUI.

Step 1: Log In to Open WebUI

Open up Ollama and Docker

- Open up Ollama.

- Open Docker, which you should have already set up previously here.

- Check that the Open WebUI container shows a square (stop) symbol, which means it is running, then click the port numbers to open Open WebUI at localhost:3000 (http://localhost:3000).
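If the page does not load, a quick way to see which piece is missing is to ask whether anything answers on the two local addresses. The sketch below is a minimal, optional check under stated assumptions: Ollama is listening on its usual default port 11434, and Open WebUI is on port 3000 as in this guide. The names `is_reachable` and `check_services` are hypothetical helpers, not part of Ollama or Open WebUI.

```python
import urllib.error
import urllib.request

# Assumed addresses: 11434 is Ollama's usual default port, and this guide
# opens Open WebUI at localhost:3000. Change them if your setup differs.
OLLAMA_URL = "http://localhost:11434"
OPEN_WEBUI_URL = "http://localhost:3000"


def is_reachable(url: str, timeout: float = 2.0) -> bool:
    """Return True if an HTTP request to `url` gets any response at all."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered (just not with 2xx), so something is running.
        return True
    except OSError:
        # Connection refused, timeout, etc.: nothing is listening there.
        return False


def check_services() -> dict:
    """Report whether Ollama and Open WebUI respond on the assumed ports."""
    return {
        "ollama": is_reachable(OLLAMA_URL),
        "open_webui": is_reachable(OPEN_WEBUI_URL),
    }
```

Calling `check_services()` returns something like `{"ollama": True, "open_webui": False}`; a `False` for `open_webui` usually points back at the Docker container, and a `False` for `ollama` means the Ollama app is not running yet.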

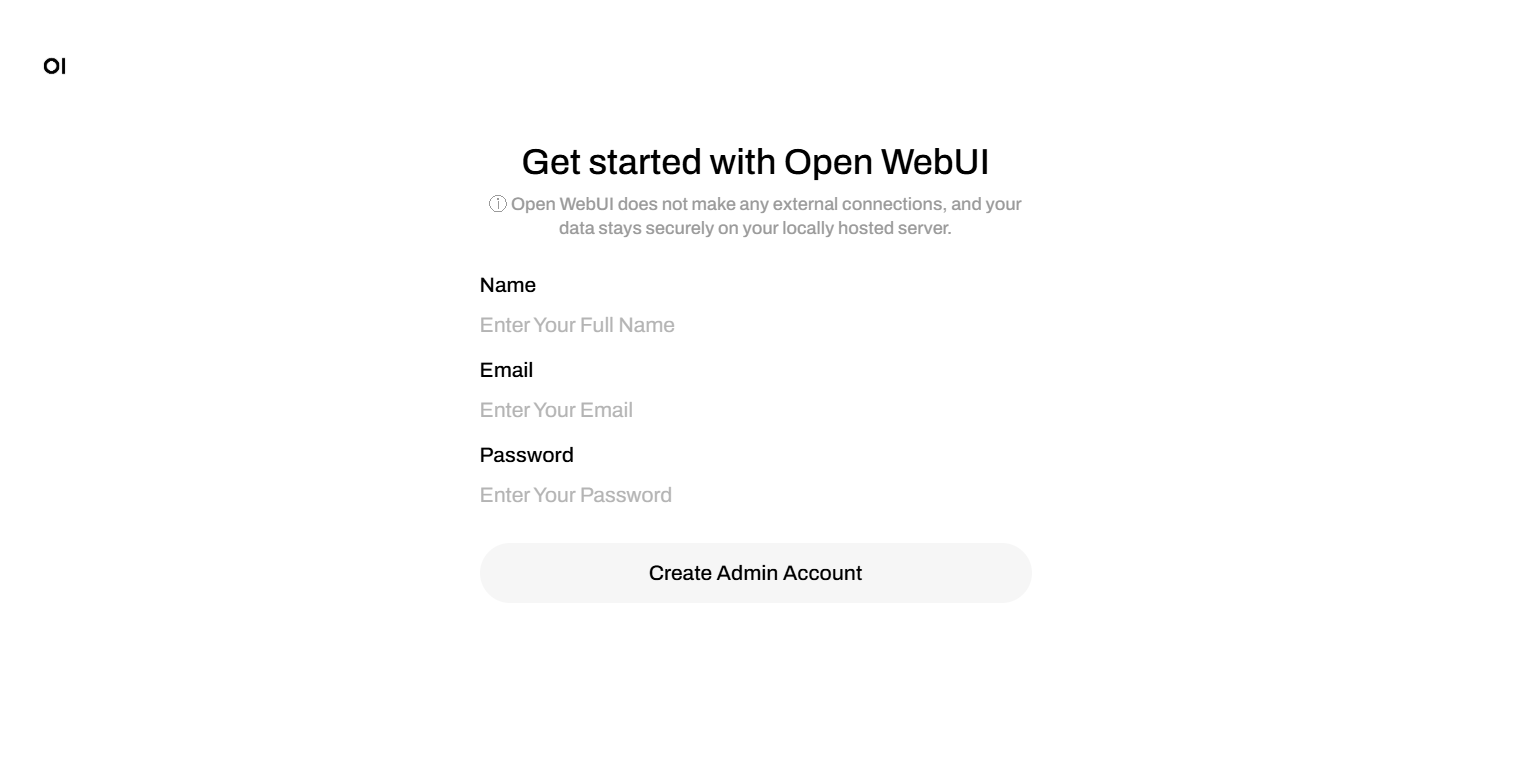

If this is the first time you are logging in, you are the administrator. Create your own password.

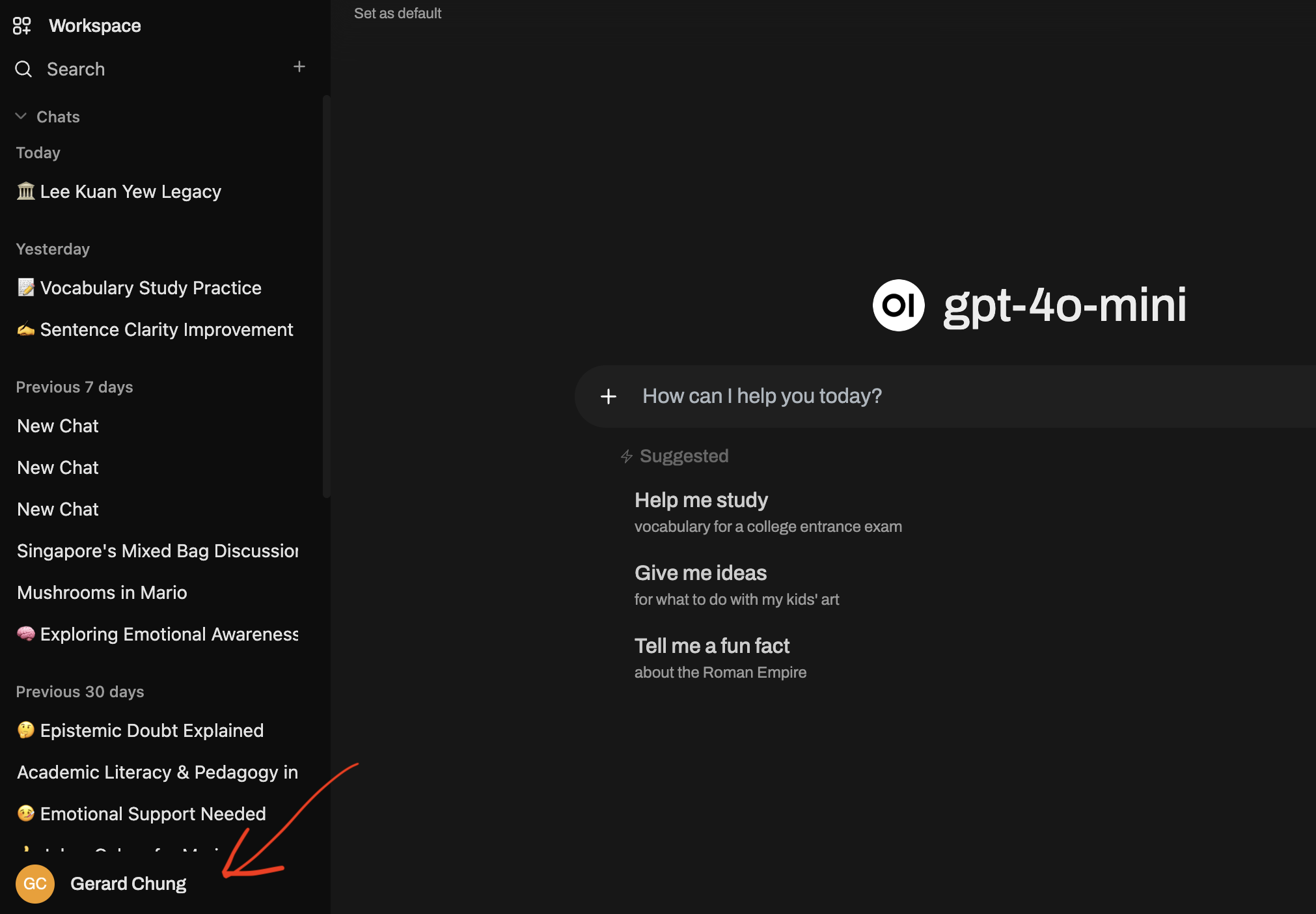

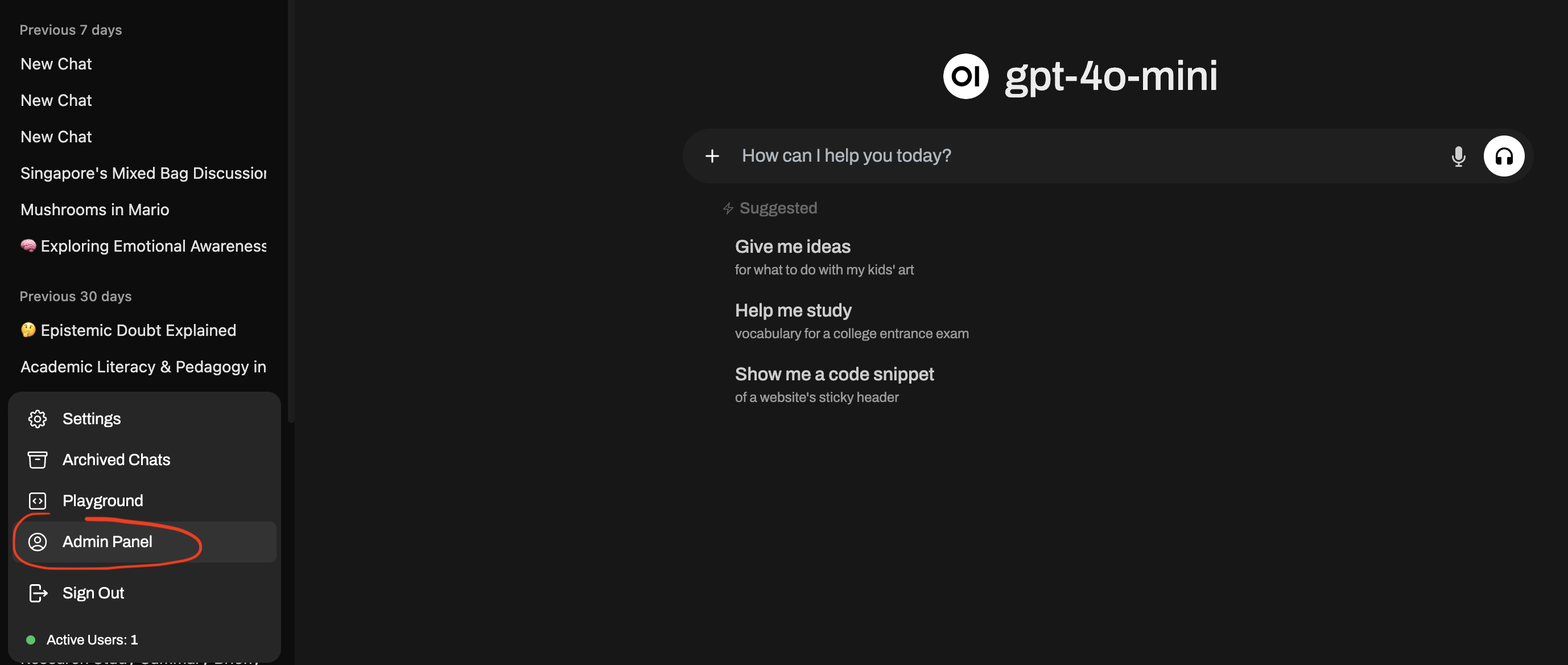

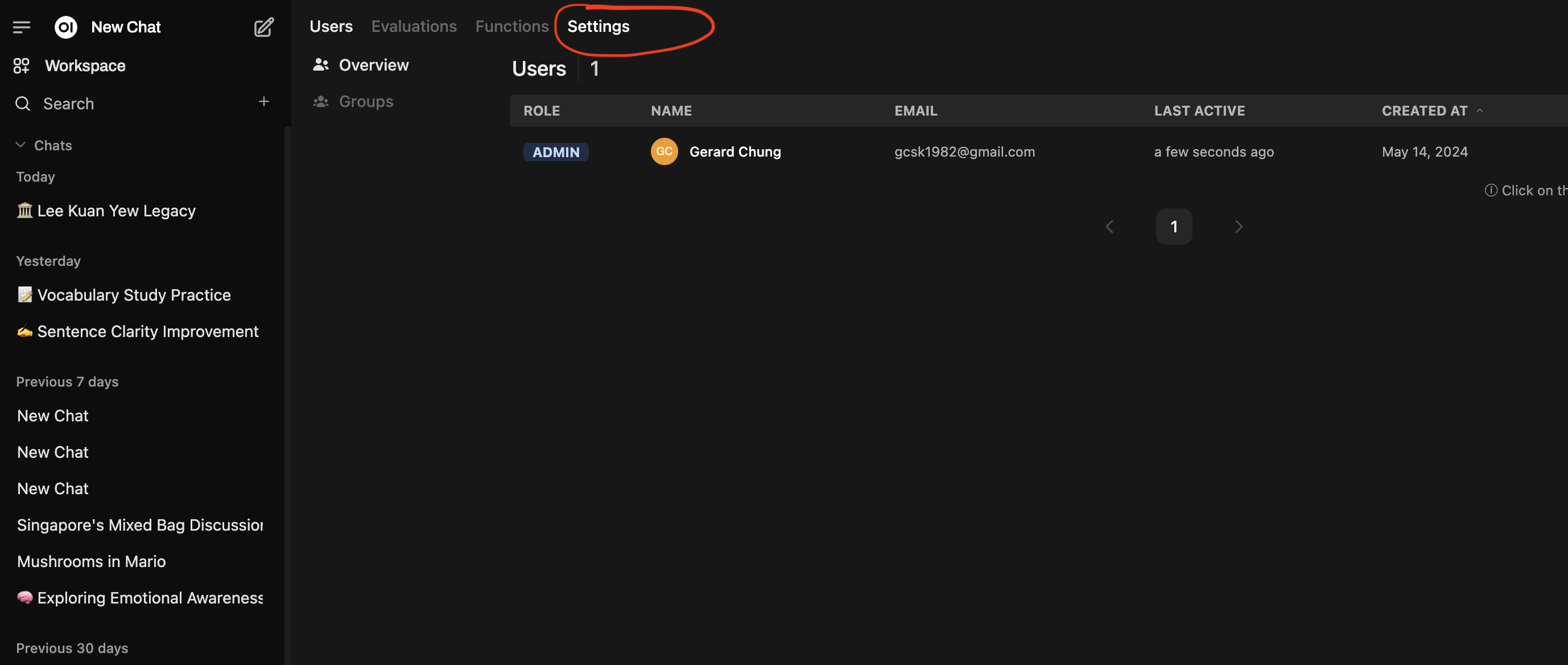

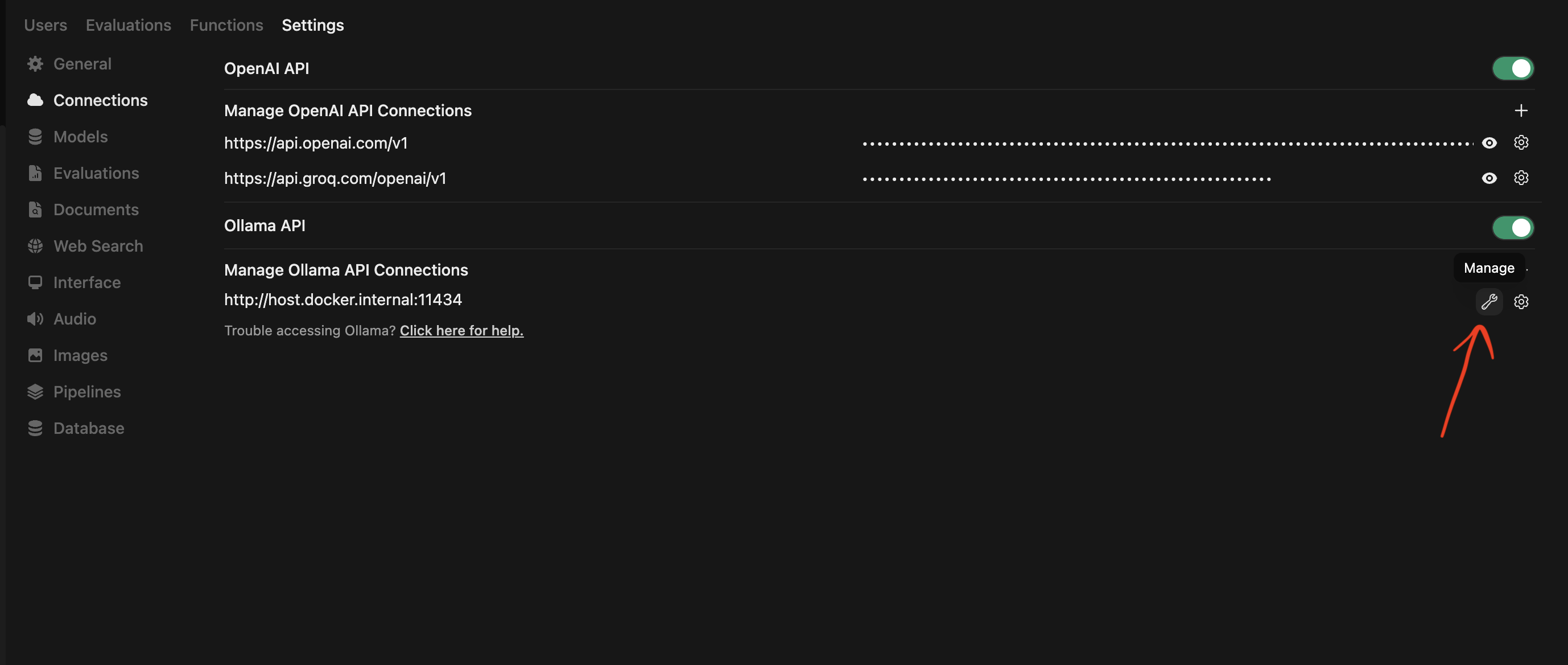

Step 2: Go to Settings to enter a model tag

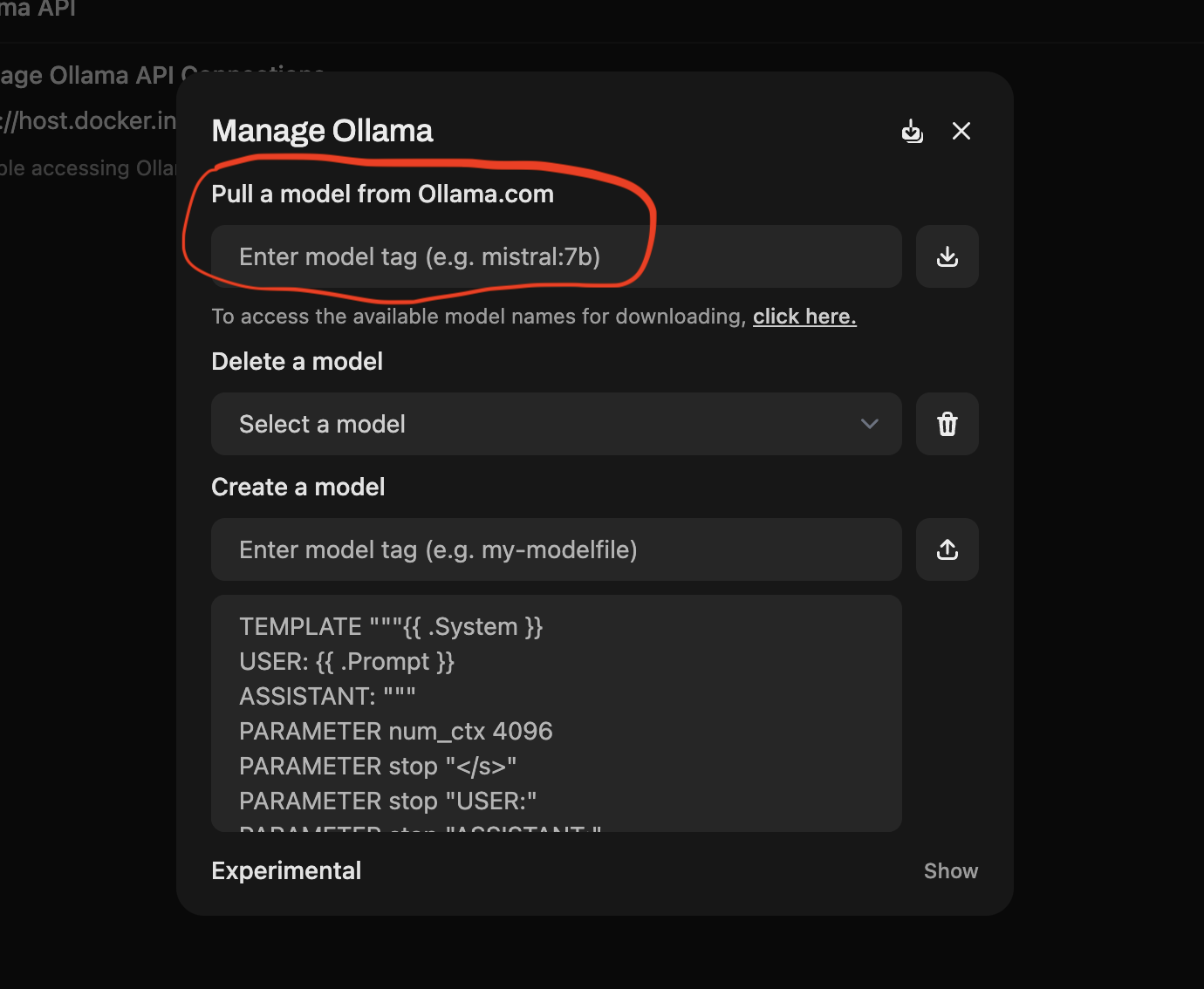

When you first set up, you will not have any LLMs downloaded, so we will download a small LLM through Ollama using Open WebUI.

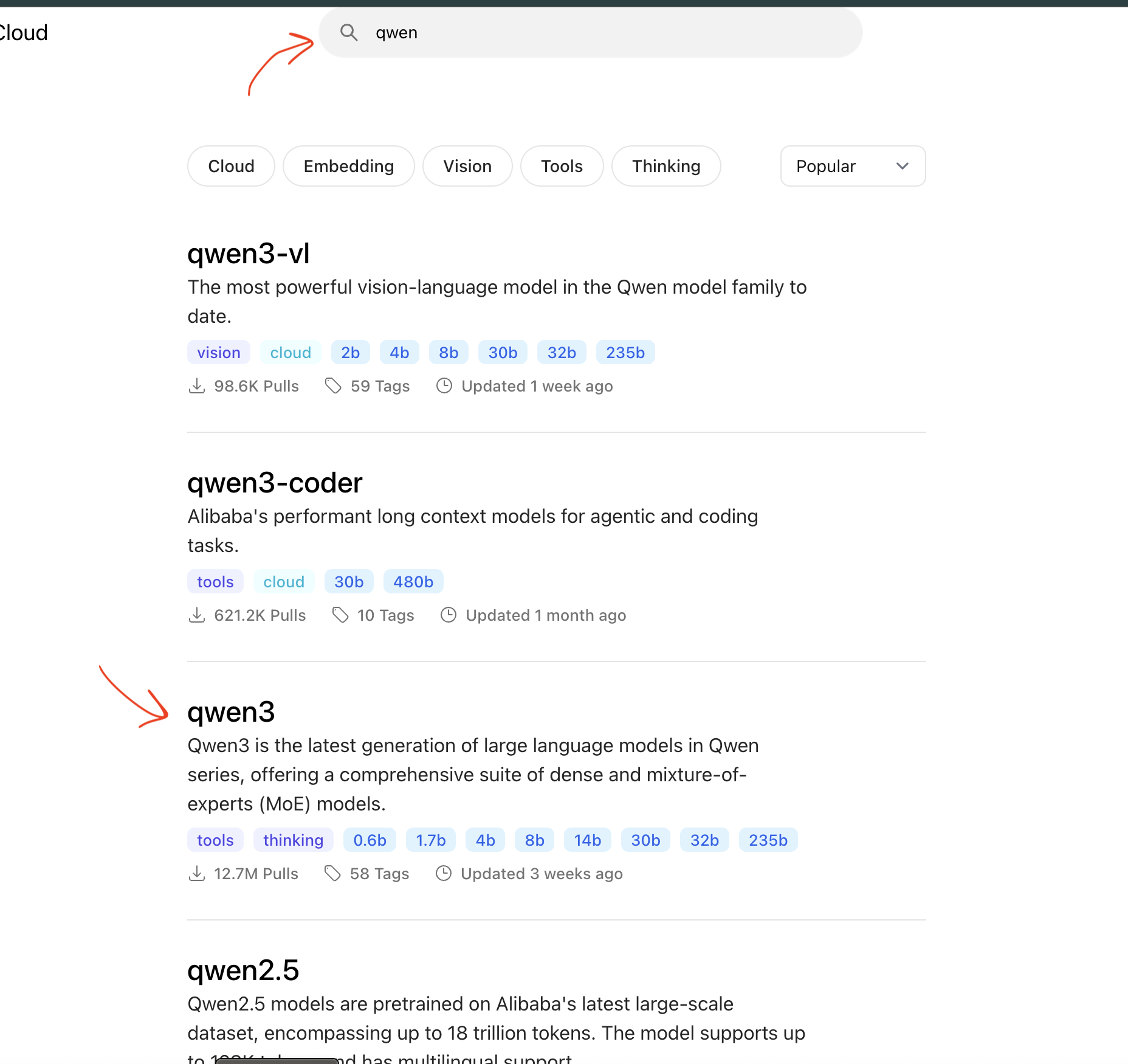

Step 3: Go to the Ollama website to get the model tag for the LLM you want to use

- Go to https://ollama.com/search, or go to the Ollama website and click “Models”.

- Find and select the model you want. Your laptop likely has no dedicated GPU and limited memory, so choose only small models (i.e., those of 1GB or less).
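The "1GB or less" rule of thumb is easy to apply by hand, but a small sketch makes the comparison explicit. It assumes sizes are copied as strings the way the Ollama model pages display them (e.g. "523MB" for `qwen3:0.6b`) and, as a rough approximation, treats 1GB as 1000MB. `size_in_mb` and `small_models` are hypothetical helpers; you fill the dictionary yourself from the model pages.

```python
import re

# Assumption: sizes look like those on the Ollama model pages, e.g. "523MB"
# or "1.1GB". 1GB is treated as 1000MB, which is fine for a rough cut-off.
_UNITS_MB = {"KB": 0.001, "MB": 1.0, "GB": 1000.0}


def size_in_mb(size: str) -> float:
    """Convert a size string such as '523MB' or '1.1GB' to megabytes."""
    match = re.fullmatch(r"\s*([\d.]+)\s*(KB|MB|GB)\s*", size.upper())
    if match is None:
        raise ValueError(f"unrecognised size: {size!r}")
    value, unit = match.groups()
    return float(value) * _UNITS_MB[unit]


def small_models(sizes: dict, limit: str = "1GB") -> list:
    """Return the model tags whose download size is at or below `limit`."""
    cap = size_in_mb(limit)
    return sorted(tag for tag, size in sizes.items() if size_in_mb(size) <= cap)
```

For example, `small_models({"qwen3:0.6b": "523MB"})` keeps `qwen3:0.6b`, since 523MB is under the 1GB limit. The download size is only a proxy: a model also needs some extra memory while it runs.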

Step 4: Select a small model

If you followed “Download Ollama Step 1”, you have already downloaded `gemma3:270m`. You can check by looking at the list of models currently on your device in the “Delete a model” drop-down list. If you want to download another model, continue to the next step!
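The “Delete a model” list shows what Ollama has stored locally; the `ollama list` command prints the same information in a terminal. The sketch below reads it from Ollama's local REST API (`GET /api/tags`), assuming Ollama is on its default address `http://localhost:11434`. `model_names` and `list_local_models` are hypothetical helper names; only the endpoint and its `models` / `name` fields come from Ollama.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumed default Ollama address


def model_names(tags_response: dict) -> list:
    """Extract model tags (e.g. 'gemma3:270m') from an /api/tags response."""
    return sorted(m["name"] for m in tags_response.get("models", []))


def list_local_models(base_url: str = OLLAMA_URL) -> list:
    """Ask the local Ollama server which models are already downloaded."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return model_names(json.load(resp))
```

If you completed the earlier setup step, `list_local_models()` should include `gemma3:270m`.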

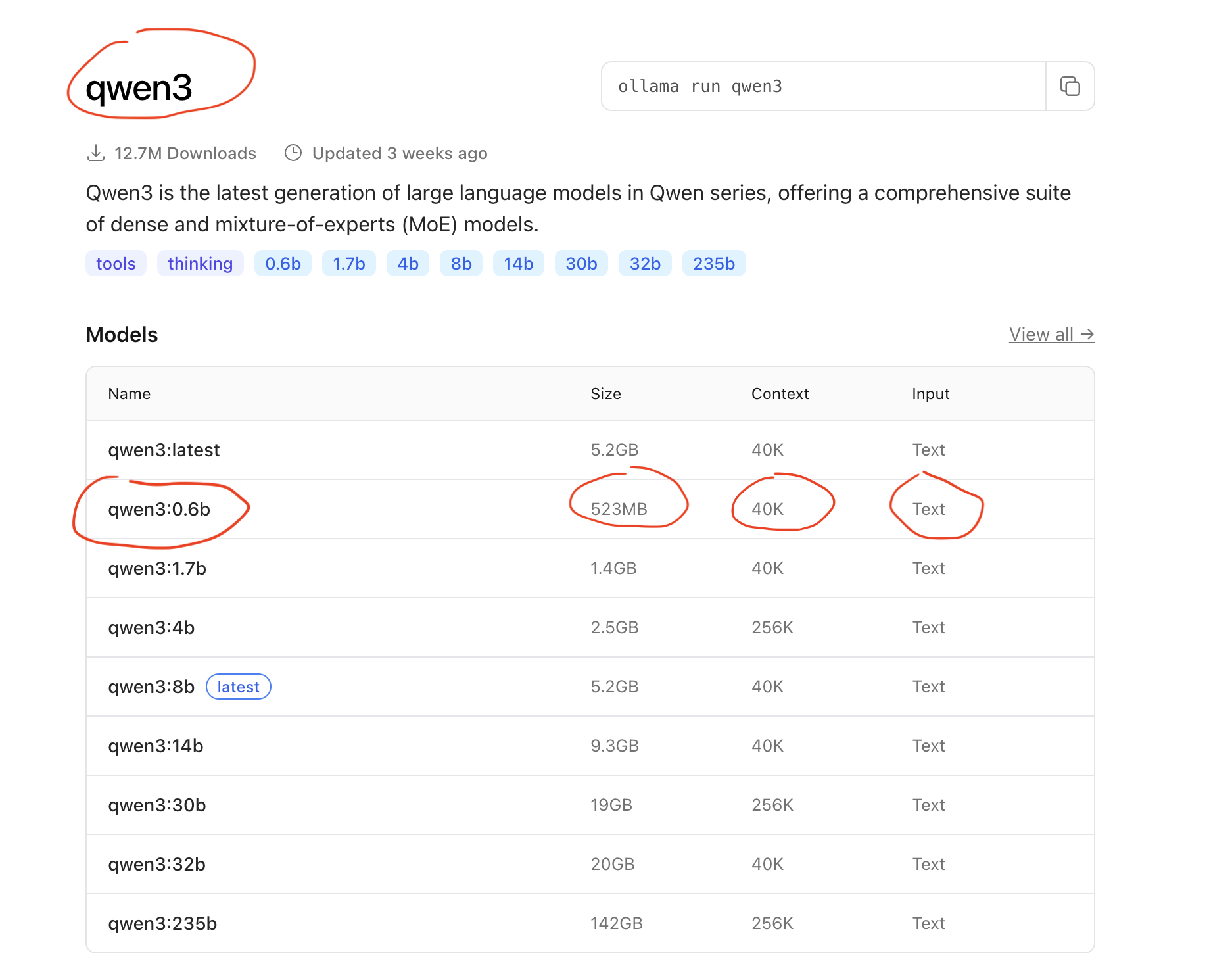

Search for a model:

- Search for “Qwen” (or click here), then click on “qwen3”.

- Hover your mouse over the 0.6B model (`qwen3:0.6b`).

- Note the size of the model: 523MB.

- Note the model tag: `qwen3:0.6b`.

Other smaller models to try:

- qwen family of models

- smollm

- phi family of models

- `deepseek-r1:1.5b`

- `llama3.2` 1b and 3b

- gemma: gemma 1 and gemma 2
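A model tag has two parts separated by a colon: the model name (`qwen3`) and a variant, which for these models is the parameter count (`0.6b` means about 0.6 billion parameters, not the download size). If you leave the variant off, Ollama uses `latest`. The sketch below splits a tag that way; `split_model_tag` is a hypothetical helper, and it assumes plain library tags like those on ollama.com/search (no custom registry hosts).

```python
def split_model_tag(tag: str) -> tuple:
    """Split an Ollama model tag like 'qwen3:0.6b' into (name, variant).

    Assumes a plain library tag. When no variant is given (e.g. 'llama3.2'),
    Ollama falls back to 'latest', so we do the same.
    """
    name, sep, variant = tag.strip().partition(":")
    if not name:
        raise ValueError(f"missing model name in {tag!r}")
    return (name, variant if (sep and variant) else "latest")
```

For instance, `split_model_tag("deepseek-r1:1.5b")` gives `("deepseek-r1", "1.5b")`, while `split_model_tag("llama3.2")` gives `("llama3.2", "latest")`. This is why it is safer to copy the full tag: the bare name may download a larger default variant.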

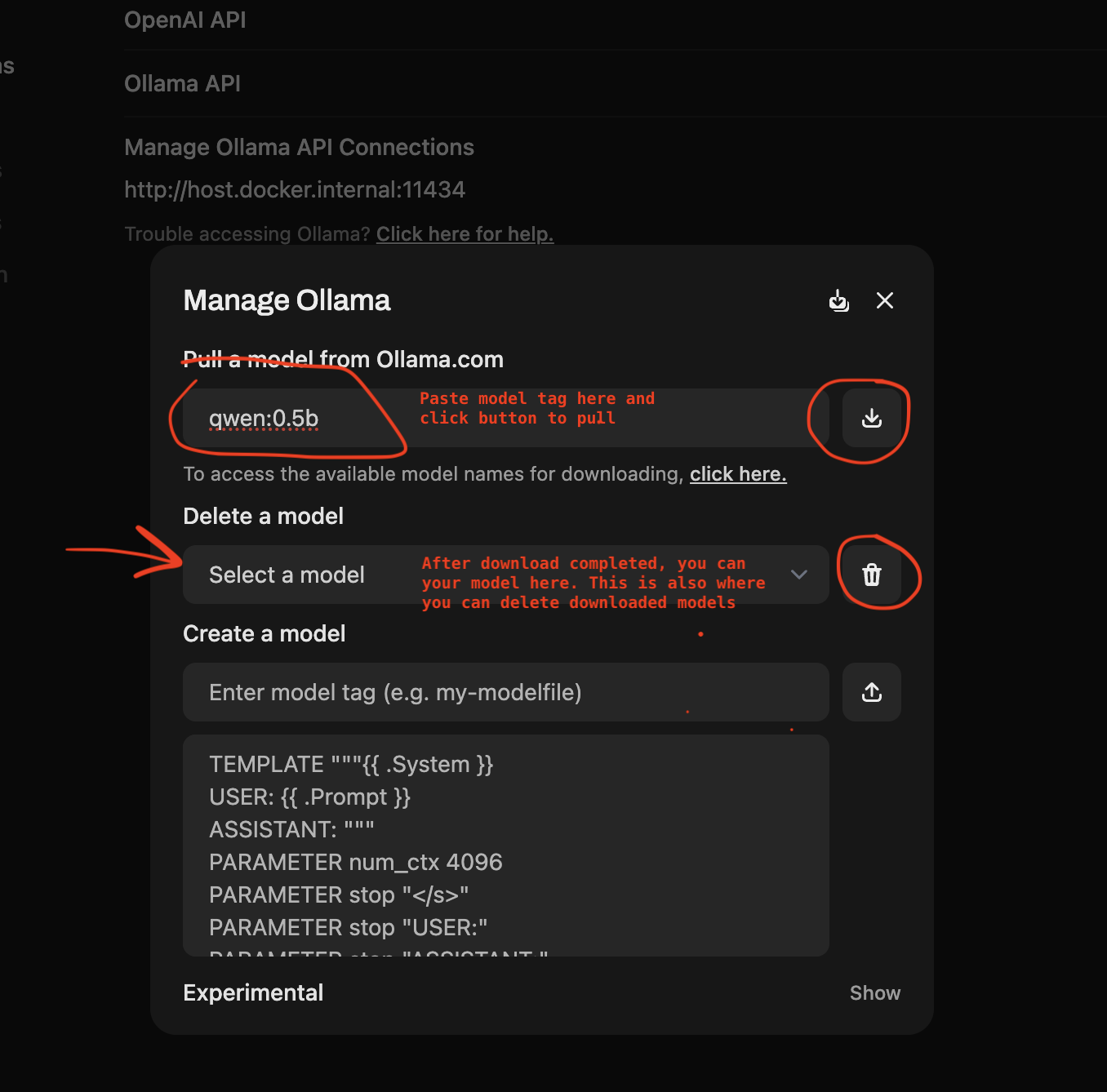

Step 5: Copy the model tag from the Ollama website into Open WebUI

- Copy and paste `qwen3:0.6b` into Open WebUI.

- Wait for the download to complete.

- When it finishes, you should see the model under “Delete a model”. This is also where you can delete the model when you no longer need it.
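Behind the scenes, Open WebUI passes the tag to Ollama, which does the actual download. Outside the browser, the `ollama pull qwen3:0.6b` and `ollama rm qwen3:0.6b` commands do the same jobs. The sketch below does both through Ollama's local REST API (`POST /api/pull`, which streams one JSON status line at a time, and `DELETE /api/delete`). It assumes the default address `http://localhost:11434` and a recent Ollama version that accepts the `model` field (older versions used `name`). The helper names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumed default Ollama address


def progress_percent(update: dict):
    """Percentage done for one streamed /api/pull status line, or None."""
    total, completed = update.get("total"), update.get("completed")
    if not total or completed is None:
        return None
    return round(100.0 * completed / total, 1)


def pull_model(tag: str, base_url: str = OLLAMA_URL) -> str:
    """Download `tag` through Ollama, printing progress; returns last status."""
    body = json.dumps({"model": tag}).encode()
    req = urllib.request.Request(f"{base_url}/api/pull", data=body,
                                 headers={"Content-Type": "application/json"})
    status = ""
    # No timeout here: a large download can take a long time.
    with urllib.request.urlopen(req) as resp:
        for line in resp:  # one JSON object per line while streaming
            if not line.strip():
                continue
            update = json.loads(line)
            status = update.get("status", status)
            pct = progress_percent(update)
            print(status if pct is None else f"{status}: {pct}%")
    return status  # "success" once the model is ready


def delete_model(tag: str, base_url: str = OLLAMA_URL) -> None:
    """Remove a downloaded model, like the "Delete a model" option."""
    body = json.dumps({"model": tag}).encode()
    req = urllib.request.Request(f"{base_url}/api/delete", data=body,
                                 method="DELETE",
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req):
        pass  # a 200 response means the model was removed
```

Running `pull_model("qwen3:0.6b")` should end by printing `success`; afterwards the model appears in the same “Delete a model” list in Open WebUI, because both use the same Ollama server.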

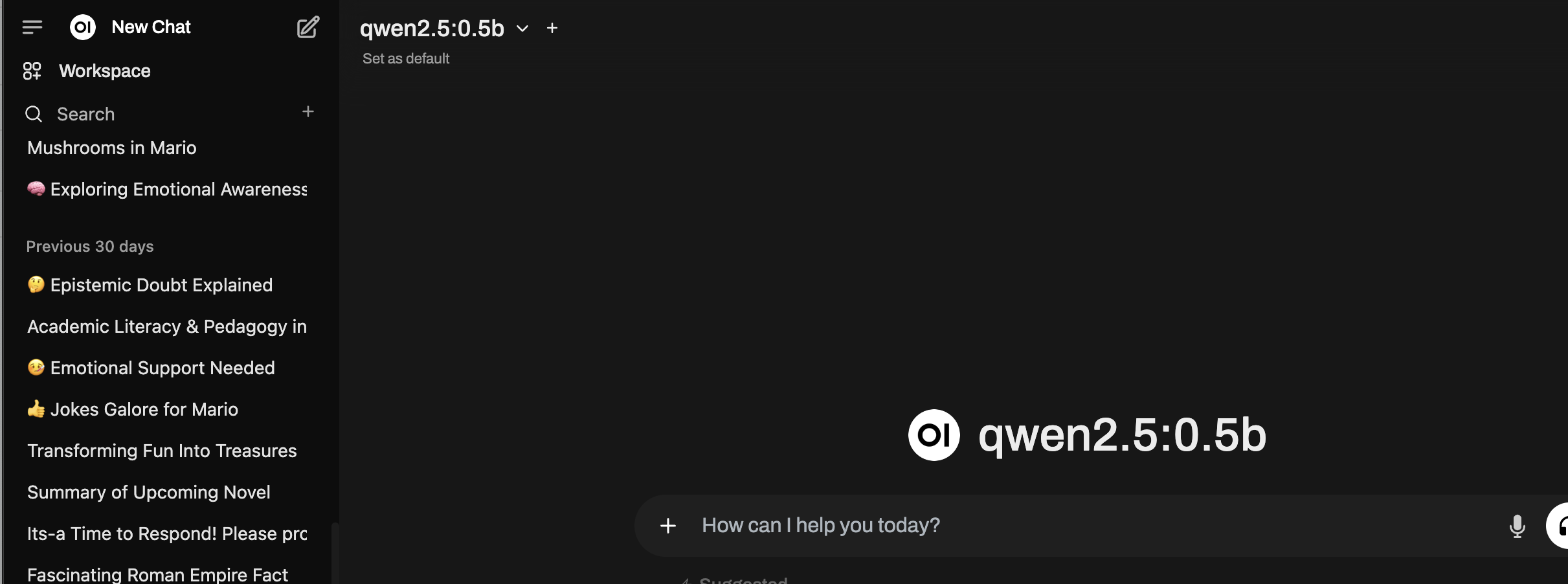

Step 6: Try the model by starting a new chat

Return to the main interface.

Click “New chat” on the left panel.

Check that the `qwen3:0.6b` model is selected at the top. If it is not, select the model you have downloaded.
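A new chat in Open WebUI sends your message to the selected model through Ollama. To try the same model without the web interface, the sketch below sends one prompt to Ollama's `POST /api/chat` endpoint with streaming turned off and returns the reply text. It assumes Ollama is on its default address and that `qwen3:0.6b` is already downloaded; `build_chat_request` and `chat` are hypothetical helper names.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumed default Ollama address


def build_chat_request(model: str, prompt: str) -> dict:
    """JSON body for Ollama's /api/chat with a single user message."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one complete reply instead of chunks
    }


def chat(model: str, prompt: str, base_url: str = OLLAMA_URL) -> str:
    """Send one prompt to a downloaded model and return its reply text."""
    body = json.dumps(build_chat_request(model, prompt)).encode()
    req = urllib.request.Request(f"{base_url}/api/chat", data=body,
                                 headers={"Content-Type": "application/json"})
    # Small models on a CPU-only laptop can be slow, so allow a long timeout.
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.load(resp)["message"]["content"]
```

For example, `chat("qwen3:0.6b", "Say hello in one sentence.")` returns the model's answer as a string. Replies will not match word for word what you see in Open WebUI, since generation is not deterministic.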

If everything works, try a slightly bigger but still small LLM:

- `llama3.2:1b` (about 1.3GB)

- Or try the newer DeepSeek-R1 model, `deepseek-r1:1.5b` (about 1.1GB)
